Code Analysis of LangChain AI Agent Implementation

An analysis of the code and design of the agent framework in LangChain


The AI agent is an innovative way to harness the power of LLMs. It gives an LLM additional tools and capabilities, so that the model can perform web searches, retrieve data from databases, and invoke general services, among other functions. LangChain offers a well-designed and adaptable implementation of AI agents.

In this post, I would like to share how the AI agent is implemented and structured in the LangChain project. You can find more information in the LangChain project documentation.

The Agent Module #

The Agent Definition #

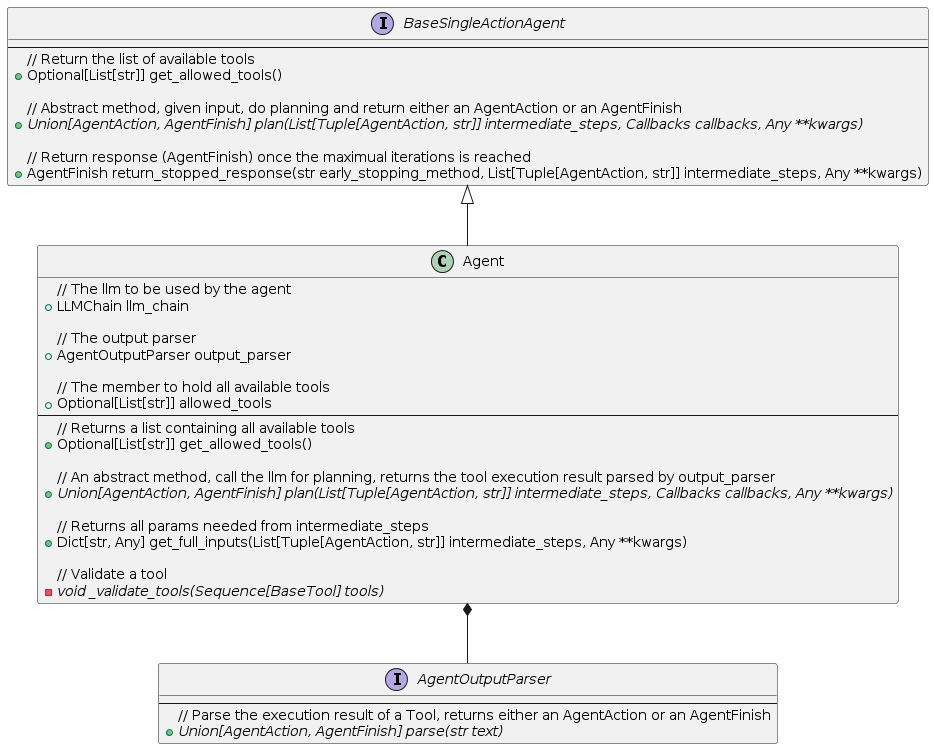

An agent is composed of:

- An `LLMChain`-type member that represents the large language model to be called.
- An output parser that parses the LLM's output into the next action.
- A list to hold all available tools.

The key functionality of an agent is the plan() method. It calls the llm_chain to decide the next tool to be executed, and the output_parser parses the LLM's reply into that decision. The tool itself is then run by the AgentExecutor, which is covered later in this post.

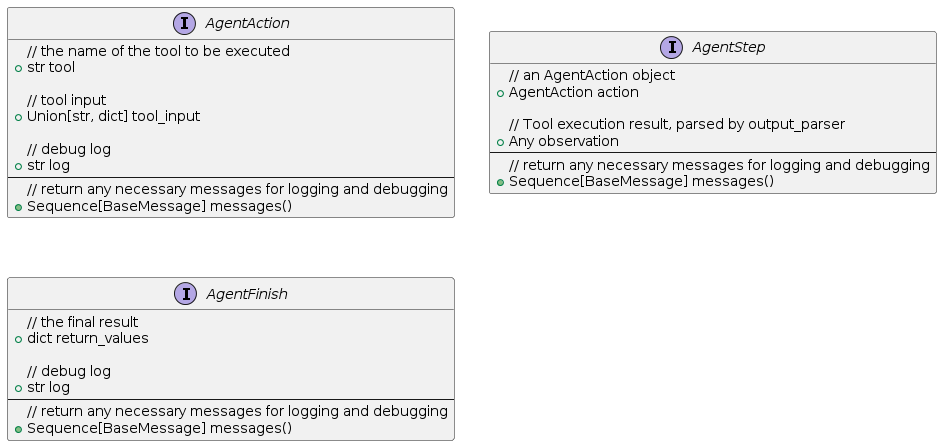
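To make the relationship between the three members concrete, here is a minimal sketch of an agent whose plan() step asks a model for the next move. It is not LangChain's code: `ToyAgent`, `ToyAction`, `ToyFinish`, `fake_llm`, `toy_parser`, and the reply format are all hypothetical, the "LLM" is a plain Python callable, and the sketch assumes the output parser is applied to the model's reply.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple, Union


@dataclass
class ToyAction:
    tool: str
    tool_input: str


@dataclass
class ToyFinish:
    output: str


@dataclass
class ToyAgent:
    # Stand-in for the LLMChain member: any callable from prompt to reply text.
    llm_chain: Callable[[str], str]
    # Stand-in for the output parser: reply text -> action or finish.
    output_parser: Callable[[str], Union[ToyAction, ToyFinish]]
    # Names of the tools the agent may choose from.
    tools: List[str] = field(default_factory=list)

    def plan(
        self, intermediate_steps: List[Tuple[ToyAction, str]], question: str
    ) -> Union[ToyAction, ToyFinish]:
        # Replay earlier tool calls and their results so the model sees them.
        history = "".join(
            f"\nUsed {action.tool}({action.tool_input}) -> {observation}"
            for action, observation in intermediate_steps
        )
        prompt = f"Tools: {', '.join(self.tools)}\nQuestion: {question}{history}"
        return self.output_parser(self.llm_chain(prompt))


def fake_llm(prompt: str) -> str:
    # Hypothetical model: asks for a search first, answers once a result exists.
    return "FINAL: done" if "->" in prompt else "search: langchain"


def toy_parser(reply: str) -> Union[ToyAction, ToyFinish]:
    if reply.startswith("FINAL:"):
        return ToyFinish(output=reply[len("FINAL:"):].strip())
    tool, _, tool_input = reply.partition(":")
    return ToyAction(tool=tool.strip(), tool_input=tool_input.strip())


agent = ToyAgent(llm_chain=fake_llm, output_parser=toy_parser, tools=["search"])
first = agent.plan([], "What is LangChain?")
second = agent.plan([(first, "a framework")], "What is LangChain?")
```

Here `first` is an action asking for the `search` tool, and `second` is a finish object, because the second prompt already contains a tool result.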

Represent the Action of the Agent #

To represent the tool to be executed by the agent, and to encapsulate any necessary data for the agent to execute a tool, AgentAction, AgentStep, and AgentFinish are provided:

- `AgentAction` represents the action to take in the next step, as planned by the LLM. To be specific, it is the next tool to be invoked.
- `AgentStep` encapsulates the result of tool execution.
- `AgentFinish` denotes the termination of the entire process. The user’s task is considered complete when an `AgentFinish` object is returned by the agent.

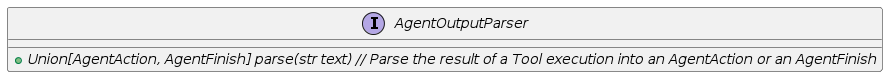
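The three roles can be pictured as small data containers. The sketch below uses simplified stand-ins named `Action`, `Step`, and `Finish`; the field names are assumptions chosen for illustration and may not match the real LangChain classes.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Union


@dataclass
class Action:
    tool: str        # name of the tool to invoke next
    tool_input: str  # argument passed to that tool


@dataclass
class Step:
    action: Action    # the action that was carried out
    observation: Any  # what the tool returned


@dataclass
class Finish:
    return_values: Dict[str, Any]  # final answer handed back to the user


def describe(event: Union[Action, Step, Finish]) -> str:
    """Render one event of an agent run as a human-readable line."""
    if isinstance(event, Finish):
        return f"finished with {event.return_values}"
    if isinstance(event, Step):
        return f"{event.action.tool} returned {event.observation!r}"
    return f"next: call {event.tool} with {event.tool_input!r}"


trace: List[Union[Action, Step, Finish]] = [
    Action("calculator", "2 + 2"),
    Step(Action("calculator", "2 + 2"), 4),
    Finish({"output": "4"}),
]
lines = [describe(event) for event in trace]
```

Keeping the planned action, its observed result, and the final answer as separate types lets the surrounding loop decide what to do next with a simple type check.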

The Output Parser #

The output parser should implement the abstract parse() method, which turns the raw text returned by the LLM into the next action to take or a final answer.

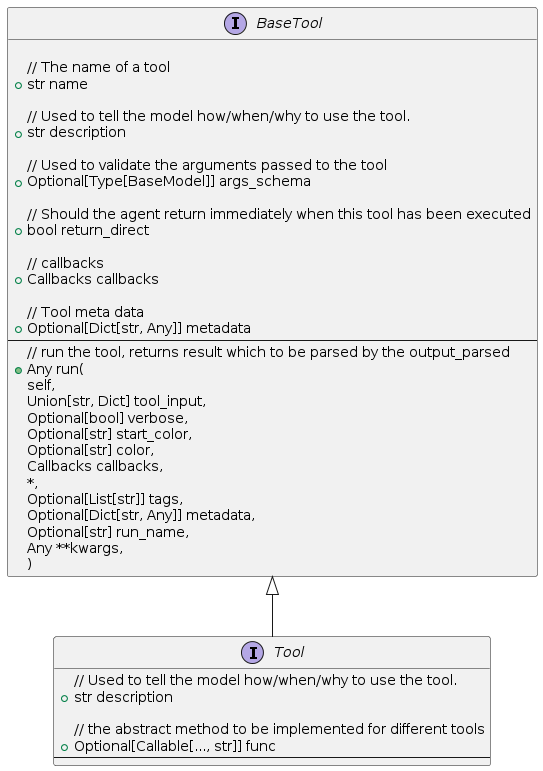
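As an illustration of the abstract parse() contract, the sketch below defines a hypothetical base class and one concrete parser. The class names, the `Action`/`Finish` tuples, and the "Action / Action Input / Final Answer" reply format are assumptions made for this example, not LangChain's actual parser.

```python
import re
from abc import ABC, abstractmethod
from typing import NamedTuple, Union


class Action(NamedTuple):
    tool: str
    tool_input: str


class Finish(NamedTuple):
    output: str


class BaseParser(ABC):
    @abstractmethod
    def parse(self, text: str) -> Union[Action, Finish]:
        """Turn raw model text into a structured decision."""


class ActionLineParser(BaseParser):
    # Assumed reply format: "Action: <tool>" followed by "Action Input: <input>",
    # or "Final Answer: <text>" when the model is done.
    _pattern = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)")

    def parse(self, text: str) -> Union[Action, Finish]:
        if "Final Answer:" in text:
            return Finish(text.split("Final Answer:", 1)[1].strip())
        match = self._pattern.search(text)
        if match is None:
            raise ValueError(f"Could not parse model output: {text!r}")
        return Action(match.group(1).strip(), match.group(2).strip())


parser = ActionLineParser()
action = parser.parse(
    "Thought: look it up\nAction: search\nAction Input: LangChain agents"
)
finish = parser.parse("Thought: I know now\nFinal Answer: It is a framework.")
```

Raising an error on unparseable text, rather than guessing, is one possible design choice; it keeps malformed model output from silently turning into a tool call.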

The Tool Module #

The Tool class represents a tool.
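Conceptually, a tool pairs a name and a description, which the model uses to choose it, with a function that does the work. The following sketch uses a hypothetical `SimpleTool` class to show that idea; it is not the LangChain `Tool` implementation, and the two example tools are invented.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SimpleTool:
    name: str                   # identifier the model uses to pick the tool
    description: str            # tells the model when the tool is useful
    func: Callable[[str], str]  # the code that actually does the work

    def run(self, tool_input: str) -> str:
        return self.func(tool_input)


def word_count(text: str) -> str:
    return str(len(text.split()))


tools = [
    SimpleTool("word_count", "Counts the words in a piece of text.", word_count),
    SimpleTool("shout", "Upper-cases a piece of text.", str.upper),
]
# Look tools up by name, so a tool name chosen by the model maps to a callable.
registry: Dict[str, SimpleTool] = {tool.name: tool for tool in tools}
result = registry["word_count"].run("agents pick tools by name")
```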

The Implementation of AgentExecutor #

LangChain uses the AgentExecutor to chain together all the components: Agent, Tools, OutputParser, and so on. The AgentExecutor adds and manages the tools, and it implements and controls the whole iteration: the LLM is called to plan the task, the agent picks the right tool and runs it with the parameters given by the LLM, and the result is fed back to the LLM for the next round.

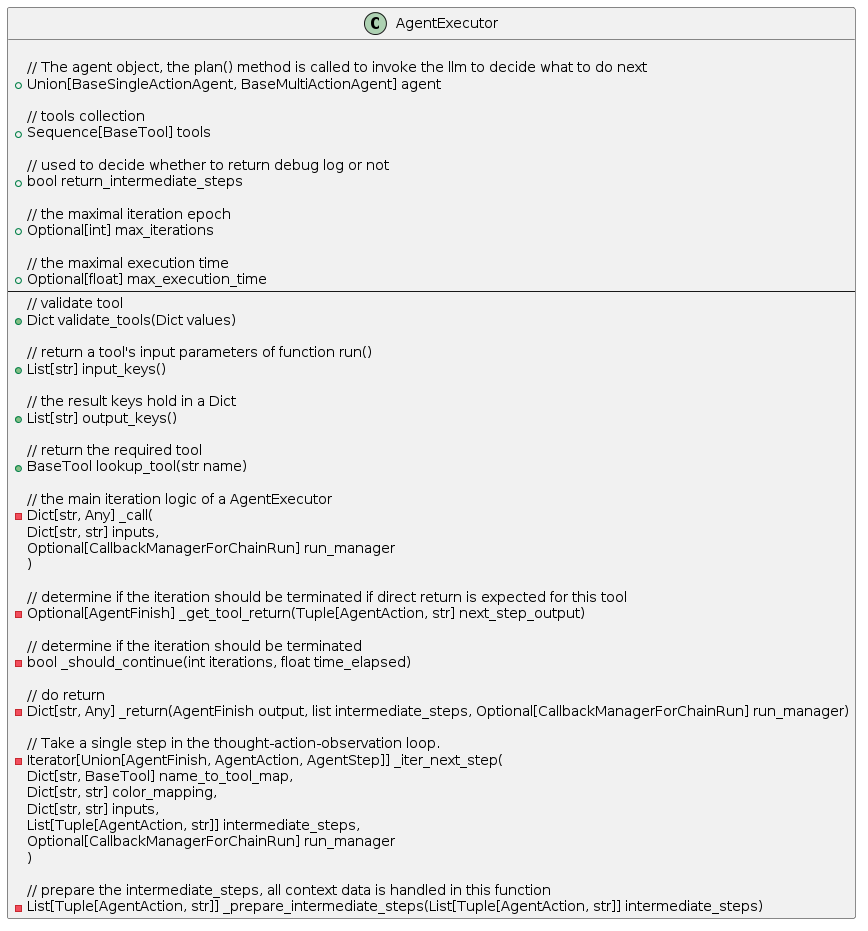

First, let’s look at how the AgentExecutor is structured. As the UML diagram shows:

- The `AgentExecutor` holds the `Agent` object, which is used to make decisions by calling the LLM (by invoking the `plan()` method defined by the `Agent`). Tools are registered to the `AgentExecutor`.
- `max_iterations` and `max_execution_time` are used to control the iteration.
- The actual agent execution is defined and implemented in a member function of `AgentExecutor`.
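A small sketch can show how two such limits might gate a loop. The free function `should_continue` below is hypothetical, and treating `None` as "no limit" is an assumption of this example rather than a statement about LangChain's behavior.

```python
from typing import Optional


def should_continue(
    iterations: int,
    time_elapsed: float,
    max_iterations: Optional[int] = None,
    max_execution_time: Optional[float] = None,
) -> bool:
    """Return False once either budget is used up; None disables a limit."""
    if max_iterations is not None and iterations >= max_iterations:
        return False
    if max_execution_time is not None and time_elapsed >= max_execution_time:
        return False
    return True


# Two iterations done out of three allowed: keep going.
keep_going = should_continue(2, 0.4, max_iterations=3)
# 2.5 seconds spent against a 2-second budget: stop.
out_of_time = should_continue(1, 2.5, max_execution_time=2.0)
```

Checking both budgets on every pass means a slow tool and a model that never finishes are both bounded.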

And now let’s have a look at how the AgentExecutor works under the hood:

```python
def _call(
    self,
    inputs: Dict[str, str],
    run_manager: Optional[CallbackManagerForChainRun] = None,
) -> Dict[str, Any]:
    """Run text through and get agent response."""
    # Construct a mapping of tool name to tool for easy lookup
    name_to_tool_map = {tool.name: tool for tool in self.tools}
    # We construct a mapping from each tool to a color, used for logging.
    color_mapping = get_color_mapping(
        [tool.name for tool in self.tools], excluded_colors=["green", "red"]
    )
    intermediate_steps: List[Tuple[AgentAction, str]] = []
    # Let's start tracking the number of iterations and time elapsed
    iterations = 0
    time_elapsed = 0.0
    start_time = time.time()
    # We now enter the agent loop (until it returns something).
    while self._should_continue(iterations, time_elapsed):
        next_step_output = self._take_next_step(
            name_to_tool_map,
            color_mapping,
            inputs,
            intermediate_steps,
            run_manager=run_manager,
        )
        if isinstance(next_step_output, AgentFinish):
            return self._return(
                next_step_output, intermediate_steps, run_manager=run_manager
            )

        intermediate_steps.extend(next_step_output)
        if len(next_step_output) == 1:
            next_step_action = next_step_output[0]
            # See if tool should return directly
            tool_return = self._get_tool_return(next_step_action)
            if tool_return is not None:
                return self._return(
                    tool_return, intermediate_steps, run_manager=run_manager
                )
        iterations += 1
        time_elapsed = time.time() - start_time
    output = self.agent.return_stopped_response(
        self.early_stopping_method, intermediate_steps, **inputs
    )
    return self._return(output, intermediate_steps, run_manager=run_manager)
```

The code works as follows:

- It initializes an empty list for storing intermediate steps, along with variables for tracking the number of iterations and the time elapsed. The start time is recorded using `time.time()`.
- The method enters a loop that continues until the `_should_continue` method returns `False`. This method checks whether the agent should continue based on the number of iterations and the time elapsed.
- Within the loop, the `_take_next_step` method is called, which performs the next action in the agent’s process. It asks the agent to call the LLM to choose the tool to be executed. The LLM's reply is parsed by the `output_parser` held by the agent into an `AgentAction` or an `AgentFinish`, and for an `AgentAction` the chosen tool is run.
- If the output of the next step is an instance of `AgentFinish`, the `_return` method is called to finalize the agent’s process and return the result.
- If the agent hasn’t finished, the output of the next step is added to the intermediate steps. If there’s only one step in the output, the `_get_tool_return` method is called to check whether the tool should return directly. If it should, the `_return` method is called.
- The number of iterations is incremented, and the time elapsed is updated.
- If the loop ends without returning a result, the `return_stopped_response` method of the agent is called, and its output is returned using the `_return` method.
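The steps above can be condensed into a toy loop that runs end to end without any LLM. This is a simplified sketch, not the `AgentExecutor` itself: `run_loop`, `scripted_plan`, the tuple and dict shapes, and the fallback message are all hypothetical, and features such as callbacks and direct tool returns are left out.

```python
import time
from typing import Callable, Dict, List, Tuple, Union

Action = Tuple[str, str]          # (tool name, tool input)
Finish = Dict[str, str]           # final return values
Steps = List[Tuple[Action, str]]  # (action, observation) pairs


def run_loop(
    plan: Callable[[Steps, str], Union[Action, Finish]],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_iterations: int = 5,
    max_execution_time: float = 10.0,
) -> Tuple[Finish, Steps]:
    steps: Steps = []
    iterations, start = 0, time.time()
    while iterations < max_iterations and time.time() - start < max_execution_time:
        decision = plan(steps, question)
        if isinstance(decision, dict):  # the planner signalled completion
            return decision, steps
        tool_name, tool_input = decision
        tool = tools.get(tool_name)
        observation = tool(tool_input) if tool else f"{tool_name} is not a valid tool"
        steps.append((decision, observation))
        iterations += 1
    # Budget exhausted: return a fallback instead of looping forever.
    return {"output": "Agent stopped due to iteration or time limit."}, steps


def scripted_plan(steps: Steps, question: str) -> Union[Action, Finish]:
    # Hypothetical planner standing in for the LLM call.
    if not steps:
        return ("length", question)
    return {"output": f"The question has {steps[-1][1]} characters."}


toolbox = {"length": lambda text: str(len(text))}
result, trace = run_loop(scripted_plan, toolbox, "hello")
stopped, stopped_trace = run_loop(
    lambda steps, question: ("length", question), toolbox, "hi", max_iterations=2
)
```

The first run finishes after one tool call. The second uses a planner that never finishes, so the loop exits on the iteration limit and returns the fallback answer along with the two recorded steps.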

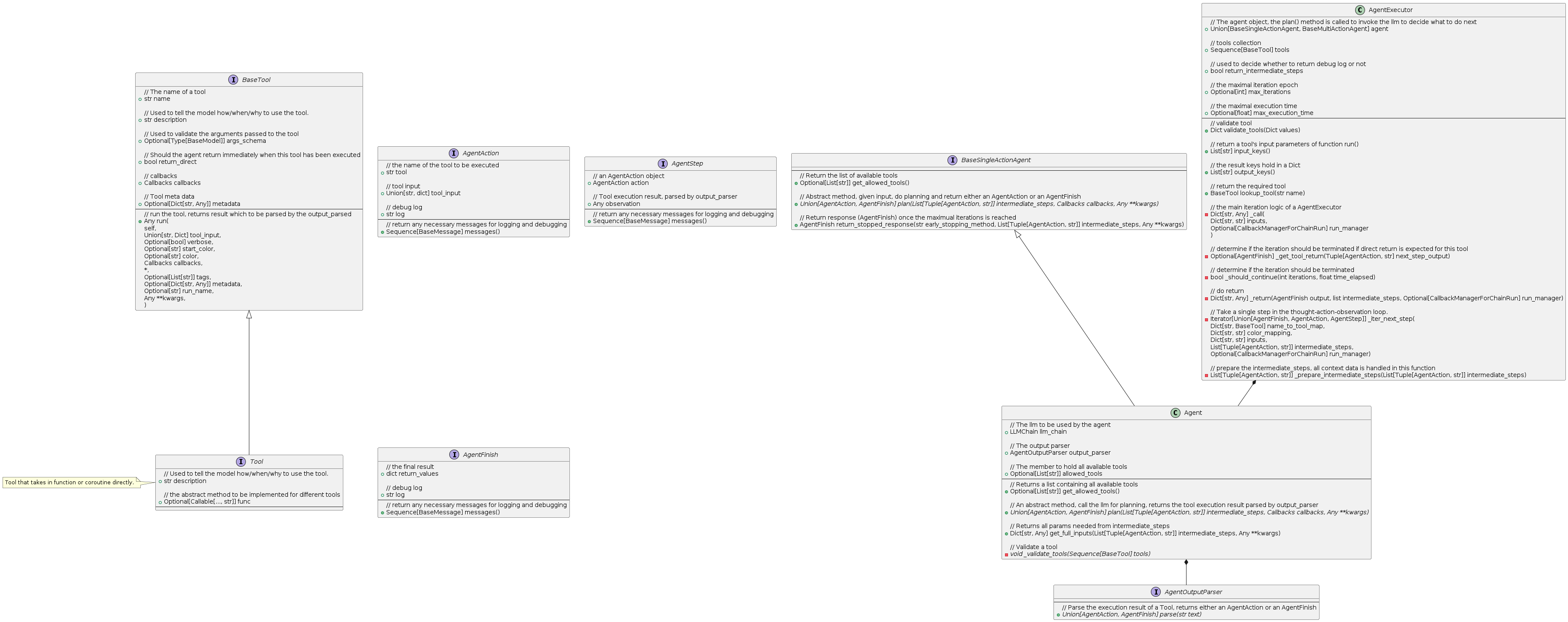

Overall Structure #

Summary #

In this post, we have delved into the implementation details of the LangChain project’s AI agent framework. We have discussed the key components of an agent, how they are interconnected, and the code behind the execution loop.